What is the supply chain behind an AI company? (part 1)

Recently, I’ve been debating whether to view Artificial Intelligence as merely a technology industry or a broader industrial sector in its own right. While analyzing the companies driving the current hype cycle, I realized that behind every major AI interface, there is a massive, complex supply chain.

While economists and investors debate an "AI bubble" or even an "everything bubble", I want to steer clear of stock prices and market caps. Instead, I am interested in the nuts and bolts: the companies, components, and infrastructure lurking behind the flashy user interfaces.

To explore this, I decided to map out the ecosystem. (Full disclosure: I also wanted an excuse to use Napkin.ai to create some visuals, and the results were stunning.)

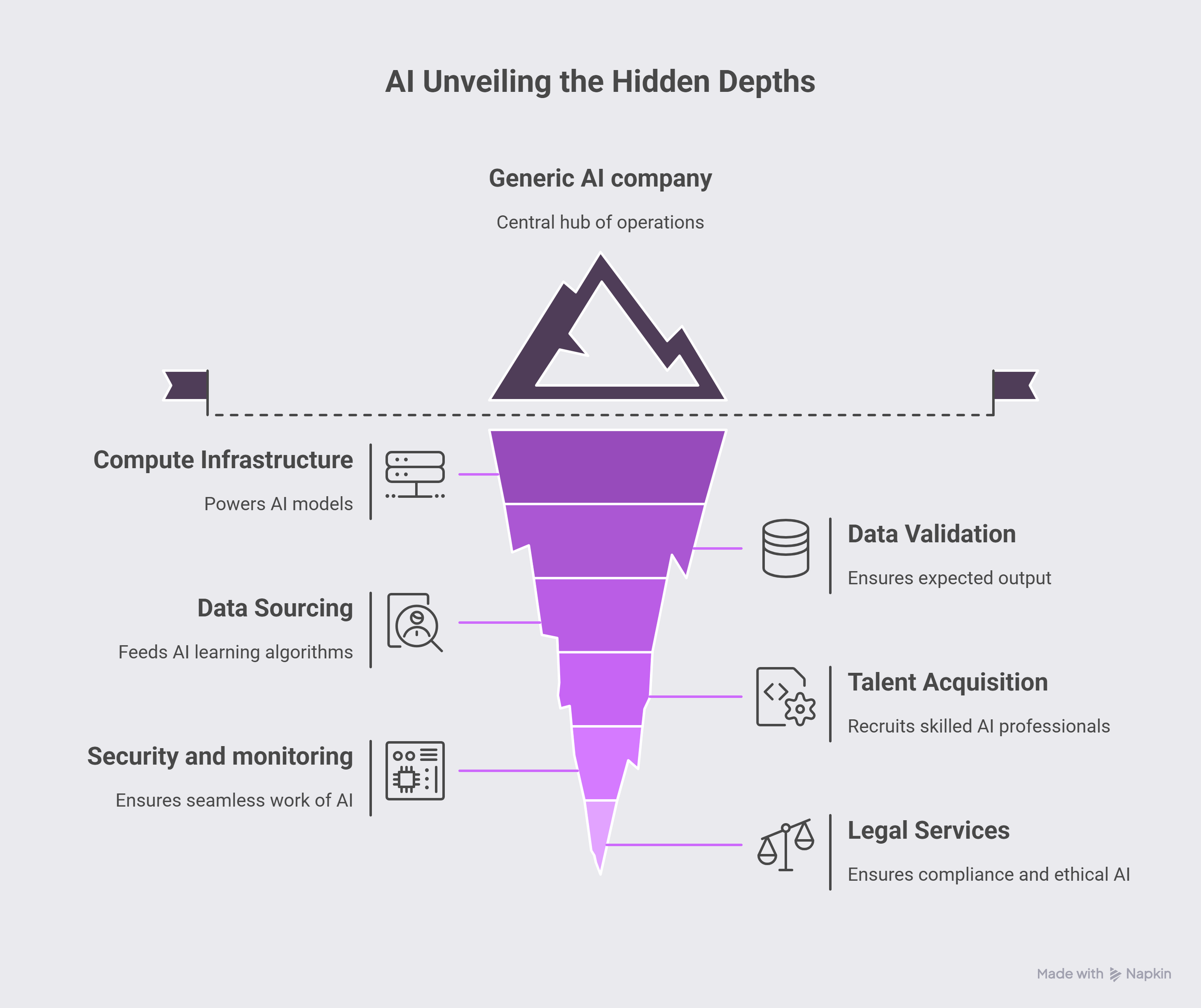

The AI Supply Chain: A Breakdown

Here is how I have categorized the critical contributors to the AI ecosystem:

Compute Infrastructure & Hardware: This is the physical foundation of AI. Without these distinct layers, no model can be trained or run.

Component Suppliers: This includes the manufacturers of GPUs, CPUs, TPUs, and advanced memory. These physical units are the engine room required to process input and generate output.

Data Center & Networking: The physical racks, cabling, and massive storage facilities that house the chips.

Energy & Environment: AI processing consumes vast amounts of electricity and generates significant heat. This layer includes energy providers and the specialized cooling infrastructure required to keep data centers operational.

Hyperscalers & Cloud Providers: These companies aggregate the hardware above and rent it out as a service. They provide the "compute on demand" that allows AI companies to scale without owning their own physical servers.

The Data Layer: Large Language Models (LLMs) thrive on massive datasets. This layer is harder to categorize, but generally splits into four sources:

Web Crawling Services: Companies that scan the open internet to collect publicly available data.

Data Licensing: Organizations that own proprietary archives (like news corps or image libraries) and license them for training.

Proprietary/Internal Data: Data collected directly through user interactions with a company’s own products.

Academic & Research: Specialized datasets provided through partnerships with universities or scientific institutions.

Data Preparation Pipeline: Once data is collected (Input) and hardware is ready (Processor), the data must be refined before it enters the model (Output).

Validation: Ensuring accuracy and consistency, correcting errors, and removing duplicates.

Transformation & Tokenization: Converting raw text or images into discrete numerical units (tokens) that the model can process.

Filtering: Removing toxic, biased, or irrelevant data to ensure safety and quality.

Augmentation: Artificially expanding datasets by creating modified versions of existing data to improve model robustness.
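To make the four steps above concrete, here is a minimal sketch in Python. Everything in it is a toy assumption for illustration: the `BLOCKLIST` and `SYNONYMS` tables, the function names, and the word-level tokenizer are hypothetical stand-ins, not how any particular AI company builds its pipeline. Real systems use trained classifiers for filtering and subword tokenizers. I also run tokenization last here, since filtering and augmenting are easier on raw text.

```python
import re

# Hypothetical stand-ins for a real toxicity filter and a real augmentation model.
BLOCKLIST = {"spamword"}
SYNONYMS = {"big": "large"}

def validate(records):
    """Validation: normalize whitespace, drop empty records and duplicates."""
    seen, cleaned = set(), []
    for text in records:
        normalized = " ".join(text.split())
        key = normalized.lower()
        if normalized and key not in seen:
            seen.add(key)
            cleaned.append(normalized)
    return cleaned

def keep(text):
    """Filtering: reject any record that contains a blocklisted word."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not (words & BLOCKLIST)

def augment(text):
    """Augmentation: add a synonym-swapped variant when one exists."""
    variant = " ".join(SYNONYMS.get(word, word) for word in text.split())
    return [text] if variant == text else [text, variant]

def tokenize(text, vocab):
    """Tokenization: map each word to an integer ID, growing the vocabulary."""
    return [vocab.setdefault(w, len(vocab)) for w in re.findall(r"\w+", text.lower())]

def prepare(records):
    """Run validation, filtering, augmentation, then tokenization."""
    vocab, token_ids = {}, []
    for text in validate(records):
        if not keep(text):
            continue
        for variant in augment(text):
            token_ids.append(tokenize(variant, vocab))
    return token_ids, vocab

raw = ["A big  model", "a big model", "", "buy spamword now", "Chips need power"]
token_ids, vocab = prepare(raw)
print(token_ids)  # [[0, 1, 2], [0, 3, 2], [4, 5, 6]]
```

Five raw records go in and three token sequences come out: the duplicate and the empty record are removed during validation, the blocklisted record is filtered, and the first sentence gains one augmented variant.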

Software Tools & Libraries: AI companies rely on a variety of software tools and libraries to develop and deploy their models.

Human Talent: Despite the narrative that AI replaces human work, the supply chain relies heavily on skilled labor.

Research & Engineering: The data scientists, ML engineers, and architects building the systems.

The Support Cast: Ethicists, policy experts, and trainers.

Reinforcement Learning from Human Feedback (RLHF): The technique depends on a vast, often invisible workforce that manually rates AI responses to "teach" the model to be helpful and safe.

Security & Governance: As models handle sensitive data, a safety net is required.

Security Providers: Defenses against adversarial attacks and data breaches.

Monitoring & Auditing: Real-time logging of system health and vulnerability scanning.

Legal & Regulatory: Guidance on GDPR, CCPA, and copyright law to ensure the "factory" doesn't get shut down.

Final thoughts

Using Napkin.ai to visualize this was a great experience, though I found that the topic of a "total supply chain" is incredibly complex for a single diagram. I initially wanted a massive mind map connecting every supplier, but the tool is better suited for storytelling flows than complex network topology.

I plan to explore the specific connections in Part 2. It is vital we understand this chain because it reveals the fragility of the system. Imagine if one semiconductor plant halts production, or if a specific rare earth metal becomes scarce. In this supply chain, a bottleneck upstream tends to surface downstream as a price hike or a delay.
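As a small preview of that idea, the chain can be treated as a directed graph and walked to see who sits downstream of a given bottleneck. This is only a sketch: the `SUPPLIES` map is a hypothetical, heavily simplified set of links I made up from the layers above, not real supplier data.

```python
from collections import deque

# Hypothetical dependency map: each supplier points to its direct customers.
SUPPLIES = {
    "rare_earth_mine": ["chip_fab"],
    "chip_fab": ["gpu_vendor"],
    "energy_provider": ["data_center"],
    "gpu_vendor": ["data_center"],
    "data_center": ["cloud_provider"],
    "cloud_provider": ["ai_company"],
}

def downstream_of(node, supplies):
    """Breadth-first walk returning every layer that depends on `node`."""
    affected, seen, queue = [], {node}, deque([node])
    while queue:
        current = queue.popleft()
        for customer in supplies.get(current, []):
            if customer not in seen:
                seen.add(customer)
                affected.append(customer)
                queue.append(customer)
    return affected

print(downstream_of("chip_fab", SUPPLIES))
# ['gpu_vendor', 'data_center', 'cloud_provider', 'ai_company']
```

Even in this toy version, a halt at the fab reaches every layer up to the AI company itself, which is the fragility I want to dig into.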