Does AI detection actually work?

This Monday, I wrote an article about my goals for the year. Since I began sharing my perspectives last month, I’ve realized just how many people are doing the same. While I am not discouraged (I believe there is still a place for my unique thoughts), I have started wondering whether I should use AI to help position myself. It would let me maintain a presence online while I focus my primary energy elsewhere.

I have dedicated over a year to learning about AI, testing its capabilities, and finding use cases to support my professional career and daily activities. My recent research into the topic revealed a flood of content: countless videos and articles on how to automate blogs or generate thousands of social media posts. Some even claim that traditional blogging is dead, suggesting that getting noticed is now a battle not only against other creators but against AI itself.

This sparked an idea. I decided to test several randomly chosen AI detectors to see whether they would categorize my writing as human or machine-generated. To be clear, I write all my own content; however, because English is not my first language, I use AI as an editor to help organize my thoughts. This led me to a simple experiment: comparing how these detectors rate three versions of a text on the same topic: my raw draft, the AI-polished version I published, and an article generated entirely by AI.

How do AI detectors work?

AI detection tools work by looking for subtle patterns in language. They analyse how predictable the word choices are (often called perplexity) and how much sentence length and structure vary (sometimes called burstiness). In the case of watermarked content, detectors use statistical tests to check whether certain preferred words appear more often than chance would allow. Other approaches compare a passage with slightly reworded versions of itself to see whether its probability patterns match those typically produced by a machine. Some tools also use machine-learning classifiers trained to spot repetitive phrasing or structural oddities that feel less natural than human writing. All of these signals are combined into a confidence score, and if that score crosses a set threshold (often around 70%), the text is labelled as likely AI-generated.
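To make the watermark idea a little more concrete, here is a minimal Python sketch of that kind of statistical test, loosely modelled on the "green list" watermark described by Kirchenbauer et al. (2023). It is an illustration under stated assumptions, not how any of the tools I tested actually work: the hashing rule in `is_green`, the green-list share `GAMMA = 0.25`, the cut-off `Z_THRESHOLD = 4.0`, and the toy generator are all hypothetical choices. A watermarking generator nudges each next word onto a green list derived from the previous word; the detector then counts green words and uses a one-proportion z-test to ask whether there are more of them than chance would allow.

```python
import hashlib
import math

# Hypothetical parameters, chosen for illustration only.
GAMMA = 0.25        # assumed share of words that count as "green" at each step
Z_THRESHOLD = 4.0   # assumed cut-off for calling a text watermarked


def is_green(prev_token: str, token: str, gamma: float = GAMMA) -> bool:
    """Hypothetical green-list rule: hash the (previous, current) word pair
    to a number in [0, 1) and call the word green if it falls below gamma."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    position = int.from_bytes(digest[:8], "big") / 2**64
    return position < gamma


def watermark_z_score(tokens: list[str], gamma: float = GAMMA) -> float:
    """One-proportion z-test: how many standard deviations the green count
    sits above the gamma * T green words expected by pure chance."""
    t = len(tokens) - 1  # number of scored (previous, current) pairs
    if t < 1:
        raise ValueError("need at least two tokens")
    green = sum(is_green(p, c, gamma) for p, c in zip(tokens, tokens[1:]))
    expected = gamma * t
    std_dev = math.sqrt(t * gamma * (1 - gamma))
    return (green - expected) / std_dev


def looks_watermarked(tokens: list[str]) -> bool:
    """Label the text as watermarked when the z-score crosses the threshold."""
    return watermark_z_score(tokens) > Z_THRESHOLD


def generate_watermarked(start: str, length: int, vocab: list[str]) -> list[str]:
    """Toy 'generator' that picks a green word whenever one exists,
    mimicking a model whose sampling is biased toward the green list."""
    tokens = [start]
    for _ in range(length):
        green_choices = [w for w in vocab if is_green(tokens[-1], w)]
        tokens.append(green_choices[0] if green_choices else vocab[0])
    return tokens


if __name__ == "__main__":
    vocab = [f"word{i}" for i in range(100)]
    marked = generate_watermarked("start", 20, vocab)
    plain = ["start"] + [vocab[(7 * i) % len(vocab)] for i in range(20)]
    print(f"watermarked: z = {watermark_z_score(marked):.2f}, flagged = {looks_watermarked(marked)}")
    print(f"plain:       z = {watermark_z_score(plain):.2f}, flagged = {looks_watermarked(plain)}")
```

The detector only needs the hashing rule, not the model itself. Text without the watermark should, on average, land near z = 0, while even a short passage whose words are all green scores far above the threshold. It also shows why this kind of test can only catch content from a generator that embeds the watermark in the first place.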

Test results based purely on my own text

When you write, not everything you put down makes sense or reads in a logical order. So what I do (and I’m still improving on it) is write down everything I know or have researched on the topic, read it several times, rework it until it makes sense to me, and then leave it to rest for a while.

So I decided to run this raw text through several of these tools. It was written 100% by me, with no AI editing. Here are screenshots of my results.

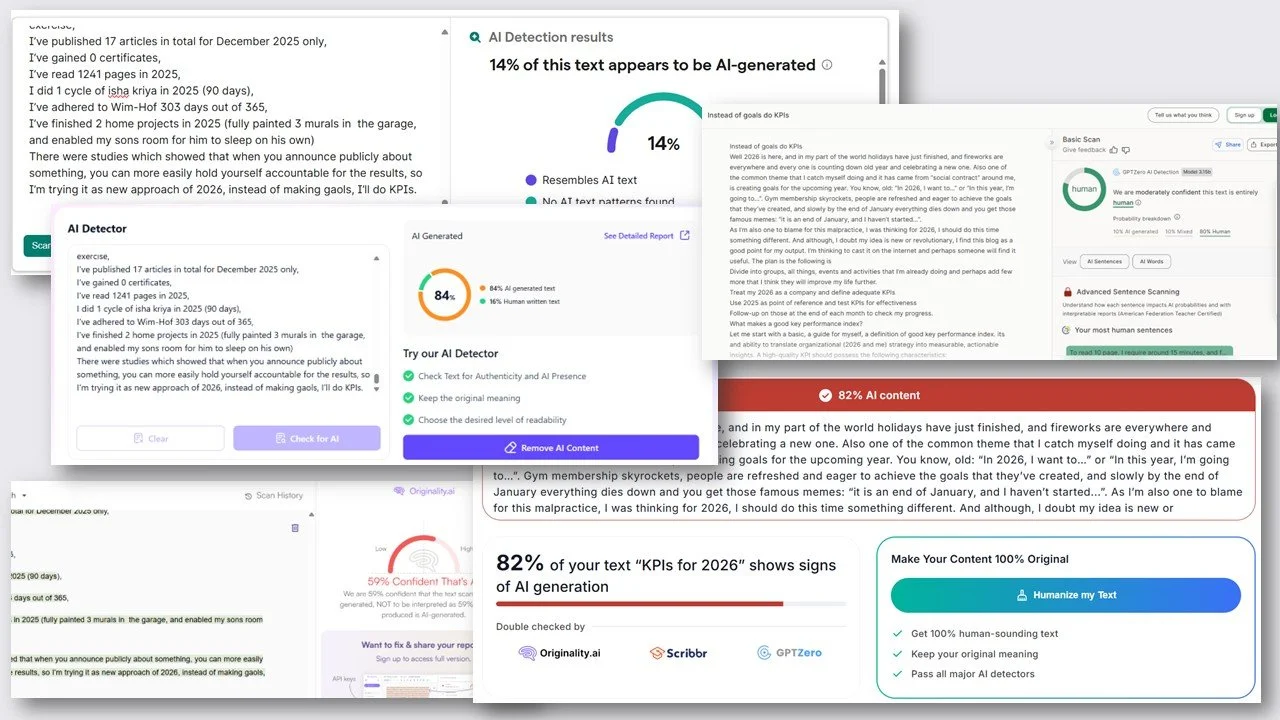

The tools and the scores I got:

Grammarly AI detection: 14% AI-generated

TextGuard.ai: 84% AI-generated

Just Done AI detector: 82% AI-generated

Originality AI: 59% confident that it is AI-generated

GPTZero: confident that it was written by a human

Test results based on the edited text published online

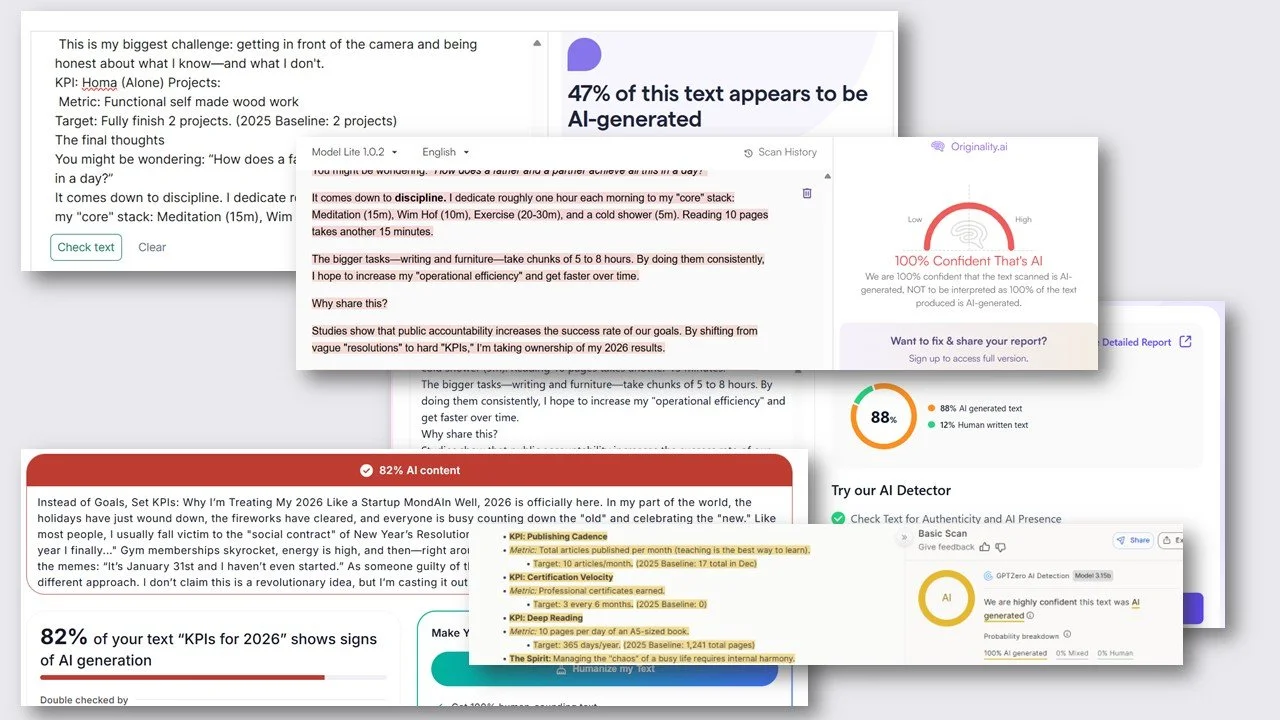

Here are the results:

Grammarly AI detection: 47% AI-generated

TextGuard: increased to 88% AI-generated

Just Done AI detector: no change in score (82% AI-generated)

Originality AI: now 100% confident that it is AI-generated

GPTZero: now also highly confident that it was written by AI

Test results based on text created solely by AI

Next, I wanted to see how a fully AI-generated article would score, so I picked Grok (at random). In my prompt, I asked it to write an article on the topic in the tone of my website, and to make it sound as human as possible without any recognizable patterns.

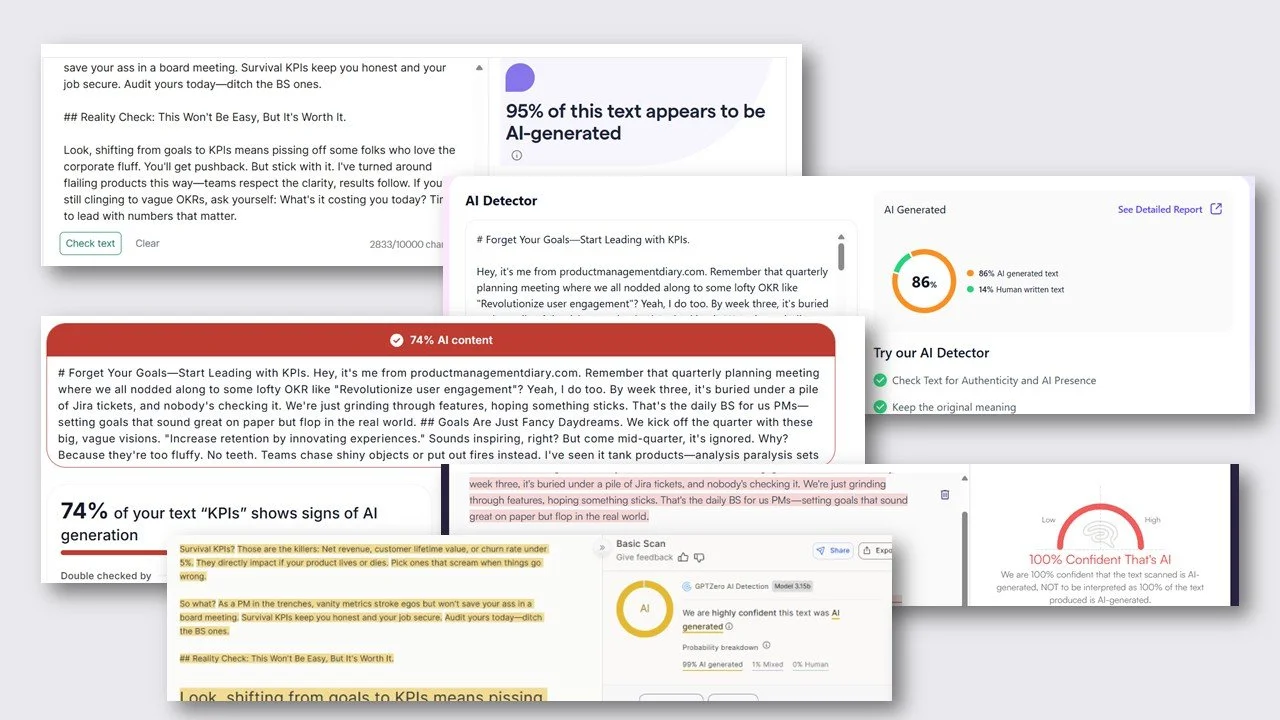

The results show the following:

Grammarly AI detector: 95% AI-generated

TextGuard: went down to 86% AI-generated

Just Done: decreased to 74% AI-generated

Originality AI: again 100% confident that it is AI-generated

GPTZero: the same, highly confident that it is AI-generated

Final Thoughts

One of the remarkable advantages of being human is our large brain. This "grey matter" is responsible not only for our creativity but also for our ability to recognize and use patterns. Large Language Models (LLMs) are trained to reproduce the patterns found in human writing, so it is only natural that they mimic one of the brain's best functions: pattern recognition. When you learn a new language, you aren't just memorizing thousands of new words; you are developing an entirely new map of patterns in your mind. This is why language acquisition is so valuable for anyone looking to expand their cognitive capabilities.

Based on this small sample, I’ve concluded that many AI detection tools are either ineffective or deliberately misleading in order to drive profit. The same unedited text, written entirely by me, scored anywhere from 14% to 84% AI-generated depending on the tool. One profit-driven tactic is nudging users to subscribe to a "Humanize" feature. I actually paid for this option for testing purposes, yet the results remained largely the same: the text was flagged similarly whether it was "humanized" or fully AI-generated.

Ultimately, I believe it comes down to the value a creator brings; even if a text is AI-generated, it can still find an audience if the message is meaningful. I learned a great deal about the current state of AI detection today, and I hope you found reading about this experiment as engaging as I found conducting it.

This post was researched and written by me, then polished for clarity with the help of AI.